Simulating a Planet on the GPU: Part 1

I miss SimEarth.

An epic tale from one of the Internet’s finest, LGR

To be fair, it didn’t go anywhere. You can still boot up a copy, mess around with evolution, atmospheric conditions, and continental drift like it’s 1990… but it’s not 1990. We have 32 years of computing advances at our backs and yet no SimEarth 2. Sure, there’s WorldBox and Worlds and a bunch of awesome planet generation projects (1, 2, 3), but none allow me to play with plate tectonics, currents, or any of the “deep” factors underlying how the Earth really works.

Sometime last year, I decided against writing Will Wright a letter, begging him to spend hundreds of hours making a SimEarth 2. Instead, I did what any good programmer would do and decided to devote thousands of my own hours to the same thing. This post covers my first step toward that goal: well over a year’s worth of work and the many detours taken along the way. While there’s a long way to go from here, I’m very happy to share my progress to this point, and promise to follow up with a “part 2” at some future date.

Polygon-Based Approaches

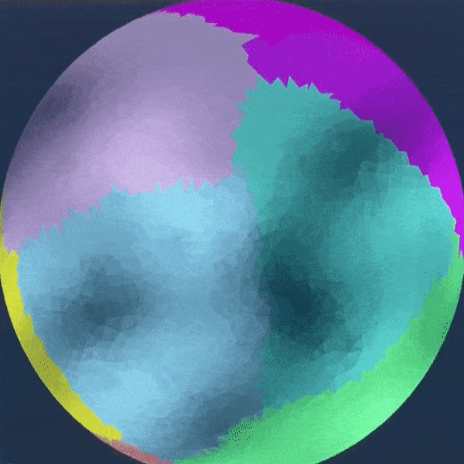

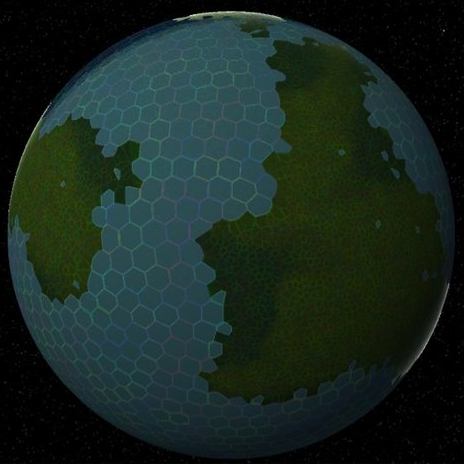

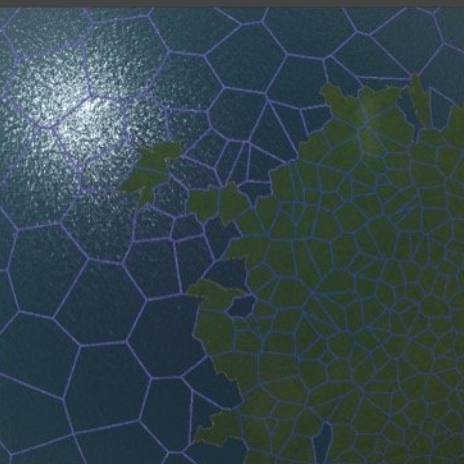

My first dozen or so attempts at realistic world generation involved generating polygons on a sphere, using Delaunay Triangulation and Voronoi Tessellation. These fancy-sounding processes allow us to cheaply divide a 2D surface (or indeed a sphere, thanks to this amazing blog) into a number of polygons for representing geographic features. Once I wrapped my head around wrapping Voronoi polygons around the North and South Poles, I managed to get something vaguely passing as a planet generated, and applied a nice water shader.
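For readers who haven't met Voronoi cells before, the defining rule is simple: every point on the sphere belongs to whichever seed is closest. The Python sketch below illustrates only that rule, by brute force. It is not the Delaunay-based construction used in the project, and the names (`random_unit_vector`, `nearest_seed`), the seed count, and the sample count are all made up for illustration.

```python
import math
import random

def random_unit_vector(rng):
    """Uniform random point on the unit sphere (a normalized Gaussian sample)."""
    while True:
        x, y, z = rng.gauss(0, 1), rng.gauss(0, 1), rng.gauss(0, 1)
        length = math.sqrt(x * x + y * y + z * z)
        if length > 1e-9:
            return (x / length, y / length, z / length)

def nearest_seed(point, seeds):
    """Index of the seed whose Voronoi cell contains `point`.

    On a unit sphere, the largest dot product corresponds to the smallest
    great-circle distance, so no trigonometry is needed.
    """
    def dot_with(i):
        return sum(p * s for p, s in zip(point, seeds[i]))
    return max(range(len(seeds)), key=dot_with)

rng = random.Random(42)                      # fixed seed so runs are repeatable
seeds = [random_unit_vector(rng) for _ in range(12)]      # hypothetical "plates"
samples = [random_unit_vector(rng) for _ in range(2000)]  # points to classify
cell_of = [nearest_seed(p, seeds) for p in samples]       # cell index per sample
```

Because distance is measured with dot products on the sphere itself, the poles need no special handling here. The wrapping headaches only appear once you build explicit polygons from a flat projection.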

The fundamental issue with a polygon-centric approach is one of tectonic plate realism: plate collisions require an incredible number of polygons to model correctly. Increasing the number of polygons in a Unity & C# environment proved prohibitively expensive, so I turned to an old favorite: C++. After finding Brendan Galea’s excellent YouTube channel and building the first of the three demos above in SDL2 & Vulkan, I realized what a titanic task writing a custom engine for this project would be, and went back to the (Unity) drawing board.

Cubemap-Overlap-Based Approaches

Frustrated with the poor performance of my polygonal approach, I began to research Unity’s performance optimization tools. I chunked up and parallelized the Voronoi tessellation to no avail. I tried rearranging memory to no avail. Clearly, something about doing this amount of math on the CPU was beyond current processing capabilities… but what about the GPU?

Like most programmers, I’d heard of the legendary power of GPUs, but had only really harnessed it via graphics programming or CUDA (for my Music ex Machina project). While I had some experience writing conventional shaders, learning how to write compute shaders seemed like a massive undertaking… but what option did I have? I had no room left on the CPU.

Put simply, compute shaders are capable of applying a GPU’s heavily-parallelized workflow to arbitrary data, meaning I could simulate a world full of tectonic plates one “pixel” at a time. Once I figured out how to represent potentially world-spanning plates as cubemaps, I managed to create a neat compute shader-based simulation with plates colliding, subducting, and emerging from seafloor spreading… but never deforming.
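To give a feel for what "one pixel at a time" means on a cubemap, here is a minimal Python sketch of the standard mapping from a direction on the sphere to a texel on one of six cube faces. It assumes the common OpenGL-style face ordering and orientation, which may not match Unity's or the project's own layout. The function name `direction_to_cubemap` and its parameters are hypothetical.

```python
def direction_to_cubemap(x, y, z, size):
    """Map a non-zero direction to (face, column, row) on a cubemap.

    Faces are numbered 0..5 as +X, -X, +Y, -Y, +Z, -Z (an assumed,
    OpenGL-style convention). Each face is `size` x `size` texels.
    """
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:
        # X is the dominant axis, so the direction hits the +X or -X face.
        face, major = (0 if x > 0 else 1), ax
        u, v = (-z if x > 0 else z), -y
    elif ay >= az:
        face, major = (2 if y > 0 else 3), ay
        u, v = x, (z if y > 0 else -z)
    else:
        face, major = (4 if z > 0 else 5), az
        u, v = (x if z > 0 else -x), -y
    # Dividing by the dominant component projects onto the face, giving
    # u, v in [-1, 1]. Rescale to texel indices and clamp the upper edge.
    col = min(size - 1, int((u / major + 1.0) * 0.5 * size))
    row = min(size - 1, int((v / major + 1.0) * 0.5 * size))
    return face, col, row
```

With a mapping like this, every texel can store a bit of plate state (say, a plate ID and crust age), and a compute shader can update all texels in parallel.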

While I liked the direction this adventure in compute shaders had taken me, I needed some new technique which could realistically deform crust at convergent plate boundaries.

Smoothed-Particle Hydrodynamics

Inspiration can come from anywhere, and as luck would have it, the inspiration for my next step forward came from watching this physical simulation of a plate boundary. The use of sand for crust material makes realistic deformation possible… and reminded me of the falling sand games which were so popular when I was a kid. Could I simulate little bits of “crust” which could bump into one another, forming mountains and valleys? Could I use the same system for air and water?

After a little digging, I discovered Smoothed-Particle Hydrodynamics, a technique commonly used in place of cellular fluid simulations. If I could just implement SPH on a sphere using my newfound knowledge of compute shaders, I’d have the fundamentals of a truly unique planet sim. With a clear implementation strategy in mind, how long could implementing it possibly take?

As it turns out, quite a while.
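For anyone unfamiliar with SPH, the core idea is that each particle's properties are a weighted sum over its neighbors, with weights given by a smoothing kernel that fades to zero at some radius `h`. The sketch below estimates density that way in plain Python, using the widely used "poly6" kernel. It is an illustration under simplifying assumptions (flat 3D space, an O(n²) neighbor loop, CPU only), not the project's compute shader. The function names are hypothetical.

```python
import math

def poly6_kernel(r, h):
    """Poly6 smoothing kernel in 3D: weight for a neighbor at distance r.

    Returns zero outside the smoothing radius h, so distant particles
    contribute nothing.
    """
    if r < 0 or r > h:
        return 0.0
    return 315.0 / (64.0 * math.pi * h ** 9) * (h * h - r * r) ** 3

def densities(positions, masses, h):
    """Estimate density at each particle: rho_i = sum_j m_j * W(|r_i - r_j|, h)."""
    result = []
    for p_i in positions:
        rho = 0.0
        for p_j, m_j in zip(positions, masses):
            # Each particle also counts itself (distance 0).
            rho += m_j * poly6_kernel(math.dist(p_i, p_j), h)
        result.append(rho)
    return result

# Two particles within each other's radius "feel" one another;
# a third, far away, only sees itself.
positions = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (5.0, 0.0, 0.0)]
rho = densities(positions, masses=[1.0, 1.0, 1.0], h=1.0)
```

Pressure and viscosity forces are built from the same kind of weighted sums. A real implementation would replace the double loop with a spatial grid, so each particle only visits nearby neighbors.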

Writing compute shaders is difficult, as is debugging and profiling them. I found myself performing true computer “science” on multiple occasions: forming a hypothesis about the performance impact of some new algorithm on the GPU and verifying it through good, old-fashioned experimentation. I learned a great deal in the process, most notably that computation is cheap and memory is expensive! For more depth, I’d highly recommend this incredible talk on compute shader performance optimization. I owe much of this project’s success to that talk.

What’s Next?

This project has proved one of the most difficult I’ve ever worked on, and yet, one of the most satisfying. I hope to build a great deal on this foundation, slowly approaching a fully-featured planetary simulation. Here are a few thoughts as to what might be next:

Create map modes to display the direction of the currents (at present you can only see the waves moving in the right direction)

Show the air currents in some clever way, perhaps with a moving cloud layer on top of the planet

Allow the wind to pick up moisture from warm bodies of water and precipitate it back on land… including rain shadows caused by mountain ranges

Allow currents to warm up the surrounding air, causing phenomena like the North Atlantic Current's miraculous warming of Europe

Add hotspots, volcanoes, and a variety of rock types

Optimize it to run on hardware beyond my Surface Book 2

Here’s to blog #2, with whatever new features that brings! Oh, and looking for a download link? Follow through to this page. Happy simulating : )